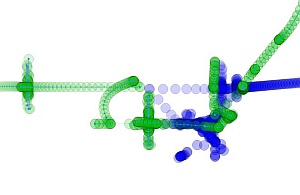

Time-lapse view of a video intended to show a fight between a blue and a green object

© David Pautler and Bryan Koenig

Humans can posit the intentions and predict the actions of others through observation of movements and behaviors. This cognitive ability, known as theory of mind, has important implications for personality development and social interaction. Despite its significance, however, scientists are only beginning to understand how people use motion information to perceive intentions. Recent studies have shown, for instance, that a simple animation of moving shapes is enough to convey intentions.

David Pautler at the A*STAR Institute of High Performance Computing and co-workers have now developed an artificial intelligence system that models how people recognize the intentions of others. The system could help computer scientists develop systems that detect and recognize human actions.

Pautler’s group built on a technique called incremental chart parsing, which computer scientists and linguists developed to analyze the structure of text, and adapted it into a system that analyzes the movements of shapes and ascribes intentions to them using small sets of rules.
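To give a feel for what "incremental" means in chart parsing, here is a minimal sketch of a textbook Earley-style recognizer that reads a sentence one word at a time and, after each word, reports whether the words so far could still begin a valid sentence and whether they already form a complete one. This is a generic illustration of the parsing technique, not the team's motion-analysis system; the names `Item`, `_close` and `incremental_parse`, the toy grammar, and the restriction to grammars without empty rules are all assumptions made for this example.

```python
from typing import NamedTuple


class Item(NamedTuple):
    """A dotted rule lhs -> rhs[:dot] . rhs[dot:] that began at input position `start`."""
    lhs: str
    rhs: tuple
    dot: int
    start: int

    def next_symbol(self):
        return self.rhs[self.dot] if self.dot < len(self.rhs) else None


def _close(chart, i, grammar):
    """Apply the predict and complete steps at position i until nothing new appears.

    Assumes the grammar has no empty rules, so every completed item started before i.
    """
    agenda = list(chart[i])
    while agenda:
        item = agenda.pop()
        sym = item.next_symbol()
        if sym is None:
            # Complete: advance every earlier item that was waiting for item.lhs.
            new_items = [w._replace(dot=w.dot + 1)
                         for w in chart[item.start] if w.next_symbol() == item.lhs]
        elif sym in grammar:
            # Predict: start every rule for the nonterminal expected next.
            new_items = [Item(sym, tuple(rhs), 0, i) for rhs in grammar[sym]]
        else:
            new_items = []  # a terminal: handled by the scan step
        for new in new_items:
            if new not in chart[i]:
                chart[i].add(new)
                agenda.append(new)


def incremental_parse(grammar, start, tokens):
    """Feed tokens one at a time; after each, record
    (prefix length, prefix still viable?, prefix already a complete sentence?)."""
    chart = [set() for _ in range(len(tokens) + 1)]
    chart[0].update(Item(start, tuple(rhs), 0, 0) for rhs in grammar[start])
    _close(chart, 0, grammar)
    report = []
    for i, tok in enumerate(tokens):
        # Scan: move the dot over a matching terminal word.
        for item in chart[i]:
            if item.next_symbol() == tok and tok not in grammar:
                chart[i + 1].add(item._replace(dot=item.dot + 1))
        _close(chart, i + 1, grammar)
        complete = any(it.lhs == start and it.start == 0 and it.next_symbol() is None
                       for it in chart[i + 1])
        report.append((i + 1, bool(chart[i + 1]), complete))
    return report


# Toy grammar (hypothetical): nonterminals map to lists of right-hand sides.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["the", "N"]],
    "N": [["dog"], ["man"]],
    "VP": [["saw", "NP"], ["saw"]],
}

for step in incremental_parse(GRAMMAR, "S", "the dog saw the man".split()):
    print(step)
```

The interesting point is the intermediate state: after "the dog saw" the parser already holds a complete sentence, yet it keeps the longer reading alive and confirms it only when "the man" arrives. Partial analyses that are held open and then either completed or abandoned as more input comes in are the property that makes the approach attractive for interpreting motion as it unfolds.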

They then created animations using Adobe Flash software. In one, object X moves away from V and toward Y and Z, but as it nears Y it suddenly arcs around Y and continues until it stops near Z (see video). Early on the movement is ambiguous and several explanations fit, but it resolves in an “A-ha!” moment with a single explanation at the end.

Three of the five candidate explanations attribute intentions to X: it intends to move farther away from V, closer to Y, or closer to Z. The other two attribute its movements to physical forces that either repel it from V or attract it to Z. The model was designed to propose any of these explanations at any time, whenever the visual cues suggest them.
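The propose-and-revise behavior described above can be illustrated with a small rule-based tracker. This is a hypothetical sketch, not the team's model: the coordinates, the function name `track_explanations`, the `stop_radius` parameter and both rules are invented for illustration, and only the three intentional explanations are modeled (the two physical-force explanations are left out). A guess is proposed whenever X's distance to a landmark changes in the direction the guess predicts, and retracted for good when it changes the other way; when X stops, only "approach" goals it has stopped near are kept, on the assumption that reaching a goal explains stopping.

```python
import math

# Hypothetical candidate set: the three intentional explanations from the text.
# Physical-force explanations (repelled by V, attracted to Z) are not modeled.
CANDIDATES = {("avoid", "V"), ("approach", "Y"), ("approach", "Z")}


def track_explanations(landmarks, path, stop_radius=1.0):
    """Return the set of live explanations after each step of X's path."""
    live, retracted, history = set(), set(), []
    for prev, cur in zip(path, path[1:]):
        if math.dist(prev, cur) == 0:
            # Hypothetical stop rule: stopping is explained only by having reached
            # a goal, so keep just the "approach" goals that X stopped near.
            live = {h for h in live
                    if h[0] == "approach" and math.dist(cur, landmarks[h[1]]) <= stop_radius}
        else:
            for name, pos in landmarks.items():
                change = math.dist(cur, pos) - math.dist(prev, pos)
                cue = "approach" if change < 0 else "avoid" if change > 0 else None
                for kind in ("approach", "avoid"):
                    h = (kind, name)
                    if h not in CANDIDATES or h in retracted:
                        continue
                    if kind == cue:
                        live.add(h)       # the cue supports this guess: propose it
                    elif cue is not None and h in live:
                        live.discard(h)   # the cue contradicts a live guess:
                        retracted.add(h)  # retract it permanently
        history.append(frozenset(live))
    return history


# Hypothetical layout: X heads right, bends around Y, then stops next to Z
# (the repeated final point marks the stop).
LANDMARKS = {"V": (0, 0), "Y": (5, 0), "Z": (10, 0)}
PATH = [(1, 0), (3, 0), (4, 1), (5, 1.5), (6, 1), (7, 0), (9, 0), (9.5, 0), (9.5, 0)]

for step, live in enumerate(track_explanations(LANDMARKS, PATH), start=1):
    print(step, sorted(live))
```

With this path all three guesses are live at first. "Approach Y" is dropped once X swings away from Y, and only "approach Z" survives the final stop. This mirrors, in a much cruder form, the early ambiguity and final single explanation described for the animation.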

The researchers asked 38 volunteers to watch the animation and confirmed that they interpreted it as the model predicted. Another 35 volunteers watched a different animation, in which object X moves in a straight line at constant speed. Far fewer of them ascribed any intention to the object.

Experiments such as these indicate which kinds of explanations the model needs and which visual cues trigger each kind. Pautler and his team are now developing the system further by formulating more rules to refine how it makes predictions.

“Computer vision systems can detect a person’s presence and track him, but these systems don’t explain what the person is doing or why he might be doing it,” says Pautler. “If we could combine computer vision capabilities with an ability to attribute intentions to what is seen, that would be useful in surveillance, robot assistance for the elderly and many other applications.”

The A*STAR-affiliated researchers contributing to this research are from the Institute of High Performance Computing.