Anyone who has used Google Translate to decipher text in a foreign language knows that the result can be more amusing and puzzling than informative. Artificial intelligence-based systems known as neural machine translation (NMT) models are coming to the rescue, improving the accuracy of automated translation applications. At a time when international connections have never been more important, robust NMT systems have the potential to bring us closer together.

However, according to the experts, we’re not quite there yet. “One of the major limitations of existing NMT models is that they are data-hungry,” explained Xuan-Phi Nguyen, a Ph.D. candidate at Nanyang Technological University and an A*STAR Computing and Information Science Scholarship recipient.

To translate accurately, NMT models require large amounts of training data. Nguyen added that this is a particular hindrance for low-resource languages such as Malay, for which translation datasets are relatively scarce.

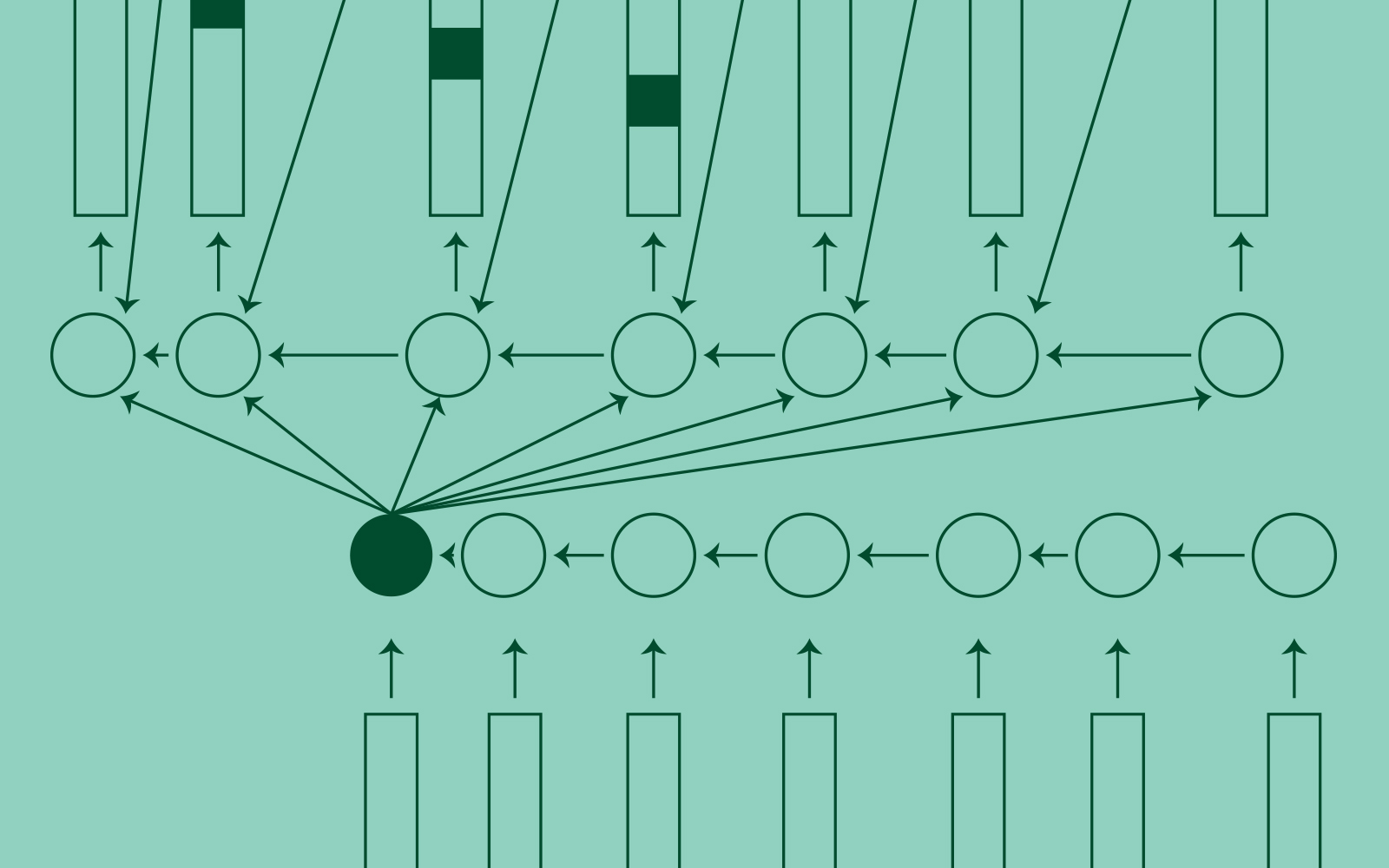

Nguyen and colleagues, led by Ai Ti Aw at A*STAR’s Institute for Infocomm Research (I2R), saw a novel solution to the challenge: upgrading NMT models so that they can be trained using ‘synthetic data.’ There are several well-established techniques for building such artificial datasets; the researchers chose to feed their original dataset into multiple forward and back translation models. A forward model converts text from the original language into the desired one, for example from English to Chinese, while a back translation model converts Chinese text back into English.

The team merged predictions produced by the forward and back translation models with the original data to create a larger, much more diverse dataset. “This effectively multiplies the size of the dataset by up to seven times,” said Nguyen, adding that, when used to train an NMT model, the new synthetic data increases translation accuracy.
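The merging step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the team’s implementation: `diversify` is a hypothetical helper, and the “models” are toy stand-ins that merely tag their input, where real ones would be separately trained NMT systems. Assuming three forward and three backward models, the merged set holds the original pairs plus six synthetic copies, which is one way to arrive at the seven-fold figure.

```python
from typing import Callable, List, Tuple

Pair = Tuple[str, str]             # (source sentence, target sentence)
Translator = Callable[[str], str]  # hypothetical stand-in for a trained NMT model


def diversify(data: List[Pair],
              forward_models: List[Translator],
              backward_models: List[Translator]) -> List[Pair]:
    """Merge the original pairs with synthetic pairs from forward and backward models."""
    augmented = list(data)  # keep the original parallel data
    for fwd in forward_models:
        # Forward model: translate each original source sentence into a synthetic target.
        augmented.extend((src, fwd(src)) for src, _ in data)
    for bwd in backward_models:
        # Backward model: translate each original target sentence into a synthetic source.
        augmented.extend((bwd(tgt), tgt) for _, tgt in data)
    return augmented


# Toy English-German data and toy "models" (assumption: three of each direction).
data = [("hello world", "hallo welt"), ("good morning", "guten morgen")]
forward_models = [lambda s, i=i: f"{s} [fwd{i}]" for i in range(3)]
backward_models = [lambda s, i=i: f"{s} [bwd{i}]" for i in range(3)]

augmented = diversify(data, forward_models, backward_models)
print(len(augmented) // len(data))  # 7
```

Every synthetic pair keeps one genuine sentence on one side, so the final model always sees real text paired with a plausible alternative translation.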

The researchers showed that their data diversification approach achieved state-of-the-art scores of 30.7 for English-to-German and 43.7 for English-to-French translation tasks. These scores come from Bilingual Evaluation Understudy (BLEU), a metric that rates machine translations from 0 to 100 according to how closely their word sequences match those of human reference translations; scores above 30 are generally considered good translations.
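To make the scoring concrete, here is a simplified corpus-level BLEU in Python. The `corpus_bleu` function is a hypothetical illustration that assumes whitespace tokenization, one reference per sentence, uniform weights over 1- to 4-grams and no smoothing. Published figures such as those above are normally computed with standard tools (for example sacreBLEU) that tokenize differently, so this sketch will not reproduce them exactly.

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count every contiguous n-token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: List[str], references: List[str], max_n: int = 4) -> float:
    """Simplified corpus BLEU (0 to 100): single reference, no smoothing."""
    matches = [0] * max_n  # clipped n-gram matches per order
    totals = [0] * max_n   # hypothesis n-gram counts per order
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # Clip each hypothesis n-gram count by how often it appears in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += sum(h_counts.values())
    if min(matches) == 0:
        return 0.0  # a zero precision makes the geometric mean zero
    # Geometric mean of the modified n-gram precisions.
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: penalize output that is shorter than the references.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * bp * math.exp(log_precision)


refs = ["the quick brown fox jumps over the lazy dog"]
print(corpus_bleu(["the quick brown fox jumps over the lazy dog"], refs))  # 100.0
print(corpus_bleu(["the quick brown fox jumps over the dog"], refs))
```

Without smoothing, a sentence with no matching 4-gram scores 0, which is one reason BLEU is usually reported over a whole test set rather than sentence by sentence.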

Promisingly, the team’s new method also improved translation performance for Sinhala and Nepali, low-resource languages whose vocabulary and grammar structures differ vastly from those of English. Compared with the baseline NMT model, data diversification consistently increased BLEU scores by 1.0 to 2.0 points across four translation tasks (English to Sinhala, Sinhala to English, English to Nepali, and Nepali to English), achieving final scores ranging from 2.2 to 8.9.

According to Nguyen, data diversification can be applied to virtually any existing NMT model to improve translation accuracy. As a next step, the team aims to address the need to train multiple models, which consumes additional computational power and time.

The A*STAR-affiliated researchers contributing to this research are from the Institute for Infocomm Research (I2R).